UIMA Tutorial and Developers' Guides

Version 2.4.0

Copyright © 2006, 2011 The Apache Software Foundation

Copyright © 2004, 2006 International Business Machines Corporation

License and Disclaimer. The ASF licenses this documentation to you under the Apache License, Version 2.0 (the "License"); you may not use this documentation except in compliance with the License. You may obtain a copy of the License at

Unless required by applicable law or agreed to in writing, this documentation and its contents are distributed under the License on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Trademarks. All terms mentioned in the text that are known to be trademarks or service marks have been appropriately capitalized. Use of such terms in this book should not be regarded as affecting the validity of the the trademark or service mark.

November, 2011

Table of Contents

- 1. Annotator & AE Developer's Guide

- 2. CPE Developer's Guide

- 3. Application Developer's Guide

- 3.1. The UIMAFramework Class

- 3.2. Using Analysis Engines

- 3.3. Using Collection Processing Engines

- 3.4. Setting Configuration Parameters

- 3.5. Integrating Text Analysis and Search

- 3.6. Working with Remote Services

- 3.7. Increasing performance using parallelism

- 3.8. Monitoring AE Performance using JMX

- 3.9. Performance Tuning Options

- 4. Flow Controller Developer's Guide

- 5. Annotations, Artifacts & Sofas

- 6. Multiple CAS Views

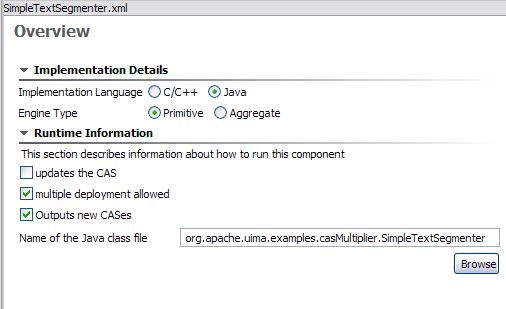

- 7. CAS Multiplier

- 8. XMI & EMF

Chapter 1. Annotator and Analysis Engine Developer's Guide

This chapter describes how to develop UIMA type systems, Annotators and Analysis Engines using the UIMA SDK. It is helpful to read the UIMA Conceptual Overview chapter for a review on these concepts.

An Analysis Engine (AE) is a program that analyzes artifacts (e.g. documents) and infers information from them.

Analysis Engines are constructed from building blocks called Annotators. An annotator is a component that contains analysis logic. Annotators analyze an artifact (for example, a text document) and create additional data (metadata) about that artifact. It is a goal of UIMA that annotators need not be concerned with anything other than their analysis logic – for example the details of their deployment or their interaction with other annotators.

An Analysis Engine (AE) may contain a single annotator (this is referred to as a Primitive AE), or it may be a composition of others and therefore contain multiple annotators (this is referred to as an Aggregate AE). Primitive and aggregate AEs implement the same interface and can be used interchangeably by applications.

Annotators produce their analysis results in the form of typed Feature Structures, which are simply data structures that have a type and a set of (attribute, value) pairs. An annotation is a particular type of Feature Structure that is attached to a region of the artifact being analyzed (a span of text in a document, for example).

For example, an annotator may produce an Annotation over the span of text

President Bush, where the type of the Annotation is

Person and the attribute fullName has the

value George W. Bush, and its position in the artifact is character

position 12 through character position 26.

It is also possible for annotators to record information associated with the entire document rather than a particular span (these are considered Feature Structures but not Annotations).

All feature structures, including annotations, are represented in the UIMA Common Analysis Structure(CAS). The CAS is the central data structure through which all UIMA components communicate. Included with the UIMA SDK is an easy-to-use, native Java interface to the CAS called the JCas. The JCas represents each feature structure as a Java object; the example feature structure from the previous paragraph would be an instance of a Java class Person with getFullName() and setFullName() methods. Though the examples in this guide all use the JCas, it is also possible to directly access the underlying CAS system; for more information see Chapter 4, CAS Reference .

The remainder of this chapter will refer to the analysis of text documents and the creation of annotations that are attached to spans of text in those documents. Keep in mind that the CAS can represent arbitrary types of feature structures, and feature structures can refer to other feature structures. For example, you can use the CAS to represent a parse tree for a document. Also, the artifact that you are analyzing need not be a text document.

This guide is organized as follows:

-

Section 1.1, “Getting Started” is a tutorial with step-by-step instructions for how to develop and test a simple UIMA annotator.

-

Section 1.2, “Configuration and Logging” discusses how to make your UIMA annotator configurable, and how it can write messages to the UIMA log file.

-

Section 1.3, “Building Aggregate Analysis Engines” describes how annotators can be combined into aggregate analysis engines. It also describes how one annotator can make use of the analysis results produced by an annotator that has run previously.

-

Section 1.4, “Other examples” describes several other examples you may find interesting, including

-

SimpleTokenAndSentenceAnnotator – a simple tokenizer and sentence annotator.

-

PersonTitleDBWriterCasConsumer – a sample CAS Consumer which populates a relational database with some annotations. It uses JDBC and in this example, hooks up with the Open Source Apache Derby database.

-

-

Section 1.5, “Additional Topics” describes additional features of the UIMA SDK that may help you in building your own annotators and analysis engines.

-

Section 1.6, “Common Pitfalls” contains some useful guidelines to help you ensure that your annotators will work correctly in any UIMA application.

This guide does not discuss how to build UIMA Applications, which are programs that use Analysis Engines, along with other components, e.g. a search engine, document store, and user interface, to deliver a complete package of functionality to an end-user. For information on application development, see Chapter 3: “Application Developer's Guide” .

1.1. Getting Started

This section is a step-by-step tutorial that will get you started developing UIMA

annotators. All of the files referred to by the examples in this chapter are in the

examples directory of the UIMA SDK. This directory is designed to

be imported into your Eclipse workspace; see Section 3.2, “Setting up Eclipse to view Example Code” for instructions on how to do

this.

See Section 3.4, “Attaching UIMA Javadocs” for how to attach the UIMA

Javadocs to the jar files.

Also you may wish to refer to the UIMA SDK Javadocs located in the docs/api/index.html directory.

Note

In Eclipse 3.1, if you highlight a UIMA class or method defined in the UIMA SDK Javadocs, you can conveniently have Eclipse open the corresponding Javadoc for that class or method in a browser, by pressing Shift + F2.

Note

If you downloaded the source distribution for UIMA, you can attach that as well to the library Jar files; for information on how to do this, see Chapter 1, Javadocs.

The example annotator that we are going to walk through will detect room numbers for rooms where the room numbering scheme follows some simple conventions. In our example, there are two kinds of patterns we want to find; here are some examples, together with their corresponding regular expression patterns:

- Yorktown patterns:

20-001, 31-206, 04-123(Regular Expression Pattern: ##-[0-2]##)

- Hawthorne patterns:

GN-K35, 1S-L07, 4N-B21 (Regular Expression Pattern: [G1-4][NS]-[A-Z]##)

There are several steps to develop and test a simple UIMA annotator.

Define the CAS types that the annotator will use.

Generate the Java classes for these types.

Write the actual annotator Java code.

Create the Analysis Engine descriptor.

Test the annotator.

These steps are discussed in the next sections.

1.1.1. Defining Types

The first step in developing an annotator is to define the CAS Feature Structure

types that it creates. This is done in an XML file called a Type System

Descriptor. UIMA defines basic primitive types such as

Boolean, Byte, Short, Integer, Long, Float, and Double, as well as Arrays of these primitive

types. UIMA also defines the built-in types TOP, which is the root

of the type system, analogous to Object in Java; FSArray, which is

an array of Feature Structures (i.e. an array of instances of TOP); and

Annotation, which we will discuss in more detail in this section.

UIMA includes an Eclipse plug-in that will help you edit Type System Descriptors, so if you are using Eclipse you will not need to worry about the details of the XML syntax. See Chapter 3, Setting up the Eclipse IDE to work with UIMA for instructions on setting up Eclipse and installing the plugin.

The Type System Descriptor for our annotator is located in the file

descriptors/tutorial/ex1/TutorialTypeSystem.xml. (This

and all other examples are located in the examples directory of

the installation of the UIMA SDK, which can be imported into an Eclipse project for

your convenience, as described in Section 3.2, “Setting up Eclipse to view Example Code”.)

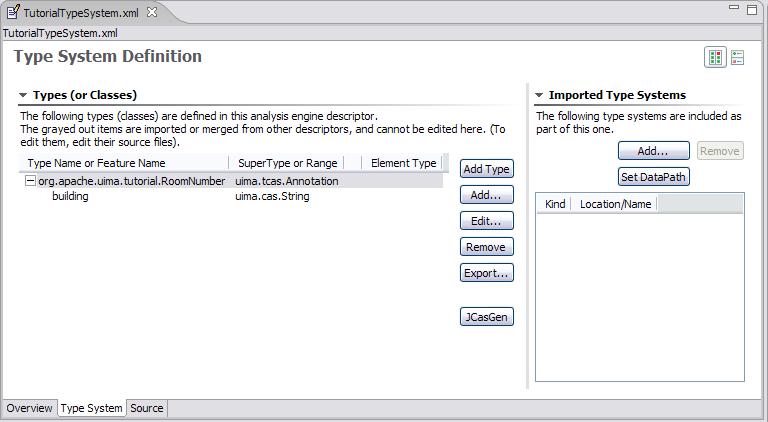

In Eclipse, expand the uimaj-examples project in the

Package Explorer view, and browse to the file

descriptors/tutorial/ex1/TutorialTypeSystem.xml.

Right-click on the file in the navigator and select Open With → Component

Descriptor Editor. Once the editor opens, click on the “Type System”

tab at the bottom of the editor window. You should see a view such as the

following:

Our annotator will need only one type –

org.apache.uima.tutorial.RoomNumber. (We use the same

namespace conventions as are used for Java classes.) Just as in Java, types have

supertypes. The supertype is listed in the second column of the left table. In this

case our RoomNumber annotation extends from the built-in type

uima.tcas.Annotation.

Descriptions can be included with types and features. In this example, there is a

description associated with the building feature. To see it,

hover the mouse over the feature.

The bottom tab labeled “Source” will show you the XML source file associated with this descriptor.

The built-in Annotation type declares three fields (called

Features in CAS terminology). The features begin

and end store the character offsets of the span of text to which the

annotation refers. The feature sofa (Subject of Analysis) indicates

which document the begin and end offsets point into. The sofa feature

can be ignored for now since we assume in this tutorial that the CAS contains only one

subject of analysis (document).

Our RoomNumber type will inherit these three features from

uima.tcas.Annotation, its supertype; they are not visible in

this view because inherited features are not shown. One additional feature,

building, is declared. It takes a String as its value. Instead

of String, we could have declared the range-type of our feature to be any other CAS type

(defined or built-in).

If you are not using Eclipse, if you need to edit the type system, do so using any XML or text editor, directly. The following is the actual XML representation of the Type System displayed above in the editor:

<?xml version="1.0" encoding="UTF-8" ?>

<typeSystemDescription xmlns="http://uima.apache.org/resourceSpecifier">

<name>TutorialTypeSystem</name>

<description>Type System Definition for the tutorial examples -

as of Exercise 1</description>

<vendor>Apache Software Foundation</vendor>

<version>1.0</version>

<types>

<typeDescription>

<name>org.apache.uima.tutorial.RoomNumber</name>

<description></description>

<supertypeName>uima.tcas.Annotation</supertypeName>

<features>

<featureDescription>

<name>building</name>

<description>Building containing this room</description>

<rangeTypeName>uima.cas.String</rangeTypeName>

</featureDescription>

</features>

</typeDescription>

</types>

</typeSystemDescription>

1.1.2. Generating Java Source Files for CAS Types

When you save a descriptor that you have modified, the Component Descriptor Editor will automatically generate Java classes corresponding to the types that are defined in that descriptor (unless this has been disabled), using a utility called JCasGen. These Java classes will have the same name (including package) as the CAS types, and will have get and set methods for each of the features that you have defined.

This feature is enabled/disabled using the UIMA menu pulldown (or the Eclipse Preferences → UIMA). If automatic running of JCasGen is not happening, please make sure the option is checked:

|

The Java class for the example org.apache.uima.tutorial.RoomNumber type can

be found in src/org/apache/uima/tutorial/RoomNumber.java

. You will see how to use these generated classes in the next section.

If you are not using the Component Descriptor Editor, you will need to generate

these Java classes by using the JCasGen tool. JCasGen reads a

Type System Descriptor XML file and generates the corresponding Java classes that

you can then use in your annotator code. To launch JCasGen, run the jcasgen shell

script located in the /bin directory of the UIMA SDK

installation. This should launch a GUI that looks something like this:

|

Use the “Browse” buttons to select your input file (TutorialTypeSystem.xml) and output directory (the root of the source tree into which you want the generated files placed). Then click the “Go” button. If the Type System Descriptor has no errors, new Java source files will be generated under the specified output directory.

There are some additional options to choose from when running JCasGen; please refer to the Chapter 8, JCasGen User's Guide for details.

1.1.3. Developing Your Annotator Code

Annotator implementations all implement a standard interface (AnalysisComponent), having several methods, the most important of which are:

-

initialize, -

process, and -

destroy.

initialize is called by the framework once when it first creates an instance of the

annotator class. process is called once per item being processed.

destroy may be called by the application when it is done using your annotator. There is a

default implementation of this interface for annotators using the JCas, called JCasAnnotator_ImplBase, which

has implementations of all required methods except for the process method.

Our annotator class extends the JCasAnnotator_ImplBase; most annotators that use the JCas will extend from this class, so they only have to implement the process method. This class is not restricted to handling just text; see Chapter 5, Annotations, Artifacts, and Sofas.

Annotators are not required to extend from the JCasAnnotator_ImplBase class; they may instead directly implement the AnalysisComponent interface, and provide all method implementations themselves. [1] This allows you to have your annotator inherit from some other superclass if necessary. If you would like to do this, see the Javadocs for JCasAnnotator for descriptions of the methods you must implement.

Annotator classes need to be public, cannot be declared abstract, and must have public, 0-argument constructors, so that they can be instantiated by the framework. [2] .

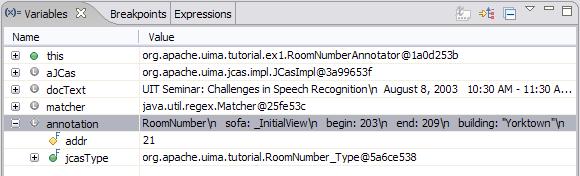

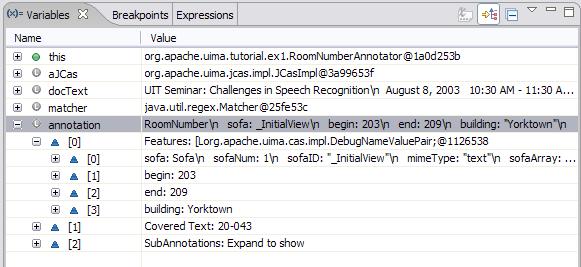

The class definition for our RoomNumberAnnotator implements the process method, and is shown here. You

can find the source for this in the

uimaj-examples/src/org/apache/uima/tutorial/ex1/RoomNumberAnnotator.java .

Note

In Eclipse, in the “Package Explorer” view, this will appear by default in the project

uimaj-examples, in the folder src, in the package

org.apache.uima.tutorial.ex1.

In Eclipse, open the RoomNumberAnnotator.java in the uimaj-examples project, under the src directory.

package org.apache.uima.tutorial.ex1;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

import org.apache.uima.analysis_component.JCasAnnotator_ImplBase;

import org.apache.uima.jcas.JCas;

import org.apache.uima.tutorial.RoomNumber;

/**

* Example annotator that detects room numbers using

* Java 1.4 regular expressions.

*/

public class RoomNumberAnnotator extends JCasAnnotator_ImplBase {

private Pattern mYorktownPattern =

Pattern.compile("\\b[0-4]\\d-[0-2]\\d\\d\\b");

private Pattern mHawthornePattern =

Pattern.compile("\\b[G1-4][NS]-[A-Z]\\d\\d\\b");

public void process(JCas aJCas) {

// Discussed Later

}

}

The two Java class fields, mYorktownPattern and mHawthornePattern, hold regular expressions that will be used in the process method. Note that these two fields are part of the Java implementation of the annotator code, and not a part of the CAS type system. We are using the regular expression facility that is built into Java 1.4. It is not critical that you know the details of how this works, but if you are curious the details can be found in the Java API docs for the java.util.regex package.

The only method that we are required to implement is process. This method is typically

called once for each document that is being analyzed. This method takes one argument, which is a JCas instance;

this holds the document to be analyzed and all of the analysis results. [3]

public void process(JCas aJCas) {

// get document text

String docText = aJCas.getDocumentText();

// search for Yorktown room numbers

Matcher matcher = mYorktownPattern.matcher(docText);

int pos = 0;

while (matcher.find(pos)) {

// found one - create annotation

RoomNumber annotation = new RoomNumber(aJCas);

annotation.setBegin(matcher.start());

annotation.setEnd(matcher.end());

annotation.setBuilding("Yorktown");

annotation.addToIndexes();

pos = matcher.end();

}

// search for Hawthorne room numbers

matcher = mHawthornePattern.matcher(docText);

pos = 0;

while (matcher.find(pos)) {

// found one - create annotation

RoomNumber annotation = new RoomNumber(aJCas);

annotation.setBegin(matcher.start());

annotation.setEnd(matcher.end());

annotation.setBuilding("Hawthorne");

annotation.addToIndexes();

pos = matcher.end();

}

}

The Matcher class is part of the java.util.regex package and is used to find the room numbers in the document text. When we find one, recording the annotation is as simple as creating a new Java object and calling some set methods:

RoomNumber annotation = new RoomNumber(aJCas);

annotation.setBegin(matcher.start());

annotation.setEnd(matcher.end());

annotation.setBuilding("Yorktown");

The RoomNumber class was generated from the type system description by the

Component Descriptor Editor or the JCasGen tool, as discussed in the previous section.

Finally, we call annotation.addToIndexes() to add the new annotation to the

indexes maintained in the CAS. By default, the CAS implementation used for analysis of text documents keeps

an index of all annotations in their order from beginning to end of the document. Subsequent annotators or

applications use the indexes to iterate over the annotations.

Note

If you don't add the instance to the indexes, it cannot be retrieved by down-stream annotators, using the indexes.

Note

You can also call addToIndexes() on Feature Structures that are not subtypes of

uima.tcas.Annotation, but these will not be sorted in any particular way. If you want

to specify a sort order, you can define your own custom indexes in the CAS: see Chapter 4, CAS Reference and Section 2.4.1.7, “Index Definition” for details.

We're almost ready to test the RoomNumberAnnotator. There is just one more step remaining.

1.1.4. Creating the XML Descriptor

The UIMA architecture requires that descriptive information about an annotator be represented in an XML file and provided along with the annotator class file(s) to the UIMA framework at run time. This XML file is called an Analysis Engine Descriptor. The descriptor includes:

Name, description, version, and vendor

The annotator's inputs and outputs, defined in terms of the types in a Type System Descriptor

Declaration of the configuration parameters that the annotator accepts

The Component Descriptor Editor plugin, which we previously used to edit the Type System descriptor, can also be used to edit Analysis Engine Descriptors.

A descriptor for our RoomNumberAnnotator is provided with the UIMA

distribution under the name

descriptors/tutorial/ex1/RoomNumberAnnotator.xml. To

edit it in Eclipse, right-click on that file in the navigator and select Open With

→ Component Descriptor Editor.

Tip

In Eclipse, you can double click on the tab at the top of the Component Descriptor Editor's window identifying the currently selected editor, and the window will “Maximize”. Double click it again to restore the original size.

If you are not using Eclipse, you will need to edit Analysis Engine descriptors manually. See Section 1.8, “Analysis Engine XML Descriptor” for an introduction to the Analysis Engine descriptor XML syntax. The remainder of this section assumes you are using the Component Descriptor Editor plug-in to edit the Analysis Engine descriptor.

The Component Descriptor Editor consists of several tabbed pages; we will only need to use a few of them here. For more information on using this editor, see Chapter 1, Component Descriptor Editor User's Guide.

The initial page of the Component Descriptor Editor is the Overview page, which appears as follows:

|

This presents an overview of the RoomNumberAnnotator Analysis Engine (AE). The left side of the page shows that this descriptor is for a Primitive AE (meaning it consists of a single annotator), and that the annotator code is developed in Java. Also, it specifies the Java class that implements our logic (the code which was discussed in the previous section). Finally, on the right side of the page are listed some descriptive attributes of our annotator.

The other two pages that need to be filled out are the Type System page and the Capabilities page. You can switch to these pages using the tabs at the bottom of the Component Descriptor Editor. In the tutorial, these are already filled out for you.

The RoomNumberAnnotator will be using the TutorialTypeSystem we looked at in Section Section 1.1.1, “Defining Types”. To specify this, we add this type system to the Analysis Engine's list of Imported Type Systems, using the Type System page's right side panel, as shown here:

|

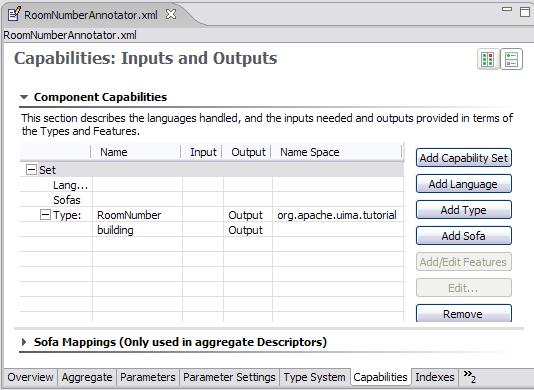

On the Capabilities page, we define our annotator's inputs and outputs, in terms of the types in the type system. The Capabilities page is shown below:

|

Although capabilities come in sets, having multiple sets is deprecated; here

we're just using one set. The RoomNumberAnnotator is very simple. It requires

no input types, as it operates directly on the document text -- which is supplied as a

part of the CAS initialization (and which is always assumed to be present). It

produces only one output type (RoomNumber), and it sets the value of the

building feature on that type. This is all represented on the

Capabilities page.

The Capabilities page has two other parts for specifying languages and Sofas. The languages section allows you to specify which languages your Analysis Engine supports. The RoomNumberAnnotator happens to be language-independent, so we can leave this blank. The Sofas section allows you to specify the names of additional subjects of analysis. This capability and the Sofa Mappings at the bottom are advanced topics, described in Chapter 5, Annotations, Artifacts, and Sofas.

This is all of the information we need to provide for a simple annotator. If you want to peek at the XML that this tool saves you from having to write, click on the “Source” tab at the bottom to view the generated XML.

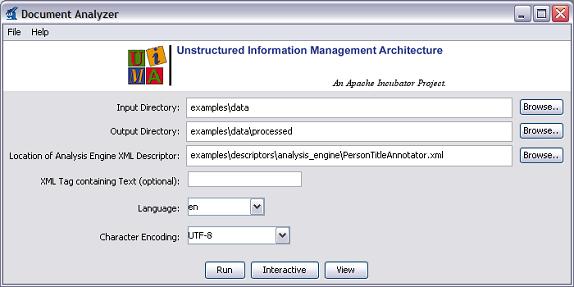

1.1.5. Testing Your Annotator

Having developed an annotator, we need a way to try it out on some example

documents. The UIMA SDK includes a tool called the Document Analyzer that will allow

us to do this. To run the Document Analyzer, execute the documentAnalyzer shell

script that is in the bin directory of your UIMA SDK

installation, or, if you are using the example Eclipse project, execute the

“UIMA Document Analyzer” run configuration supplied with that

project. (To do this, click on the menu bar Run → Run ... → and under Java

Applications in the left box, click on UIMA Document Analyzer.)

You should see a screen that looks like this:

|

There are six options on this screen:

Directory containing documents to analyze

Directory where analysis results will be written

The XML descriptor for the Analysis Engine (AE) you want to run

(Optional) an XML tag, within the input documents, that contains the text to be analyzed. For example, the value TEXT would cause the AE to only analyze the portion of the document enclosed within <TEXT>...</TEXT> tags.

Language of the document

Character encoding

Use the Browse button next to the third item to set the “Location of AE XML

Descriptor” field to the descriptor we've just been discussing

—

<where-you-installed-uima-e.g.UIMA_HOME>

/examples/descriptors/tutorial/ex1/RoomNumberAnnotator.xml

. Set the other fields to the values shown in the screen shot above (which should be the

default values if this is the first time you've run the Document Analyzer). Then

click the “Run” button to start processing.

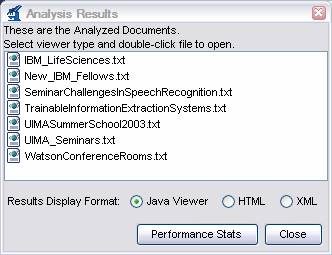

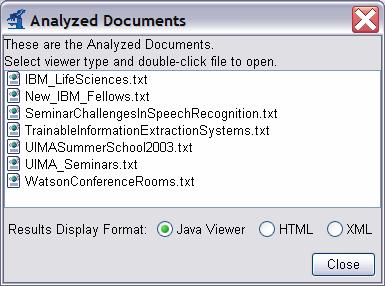

When processing completes, an “Analysis Results” window should appear.

|

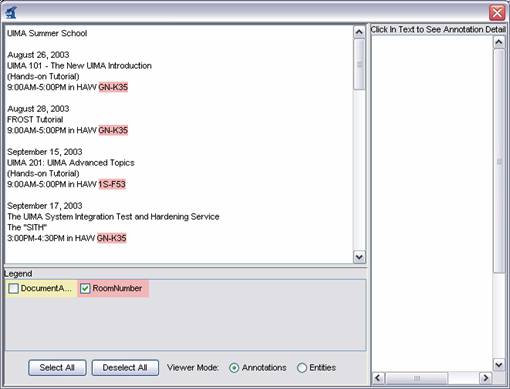

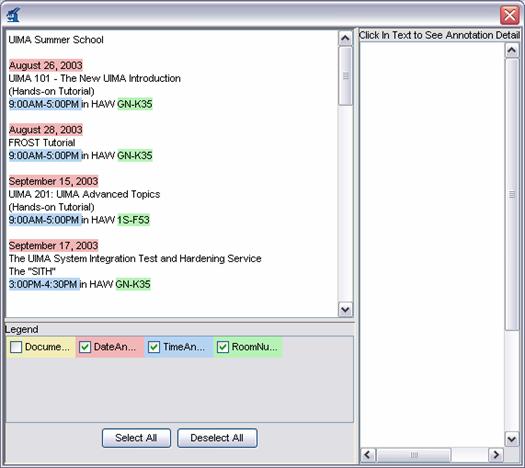

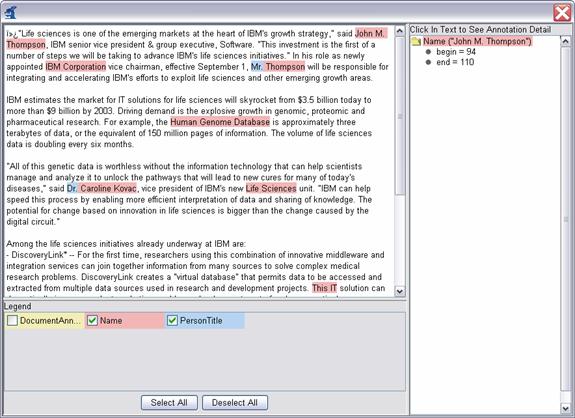

Make sure “Java Viewer” is selected as the Results Display Format, and double-click on the document UIMASummerSchool2003.txt to view the annotations that were discovered. The view should look something like this:

|

You can click the mouse on one of the highlighted annotations to see a list of all its features in the frame on the right.

Note

The legend will only show those types which have at least one instance in the CAS, and are declared as outputs in the capabilities section of the descriptor (see Section 1.1.4, “Creating the XML Descriptor”.

You can use the DocumentAnalyzer to test any UIMA annotator — just make sure that the annotator's classes are in the class path.

1.2. Configuration and Logging

1.2.1. Configuration Parameters

The example RoomNumberAnnotator from the previous section used hardcoded regular expressions and location names, which is obviously not very flexible. For example, you might want to have the patterns of room numbers be supplied by a configuration parameter, rather than having to redo the annotator's Java code to add additional patterns. Rather than add a new hardcoded regular expression for a new pattern, a better solution is to use configuration parameters.

UIMA allows annotators to declare configuration parameters in their descriptors. The descriptor also specifies default values for the parameters, though these can be overridden at runtime.

1.2.1.1. Declaring Parameters in the Descriptor

The example descriptor

descriptors/tutorial/ex2/RoomNumberAnnotator.xml is

the same as the descriptor from the previous section except that information has

been filled in for the Parameters and Parameter Settings pages of the Component

Descriptor Editor.

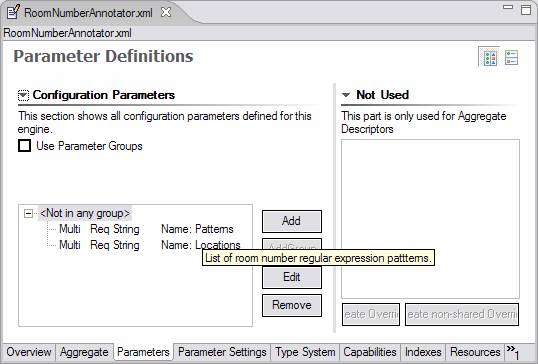

First, in Eclipse, open example two's RoomNumberAnnotator in the Component Descriptor Editor, and then go to the Parameters page (click on the parameters tab at the bottom of the window), which is shown below:

|

Two parameters – Patterns and Locations -- have been declared. In this screen shot, the mouse (not shown) is hovering over Patterns to show its description in the small popup window. Every parameter has the following information associated with it:

name – the name by which the annotator code refers to the parameter

description – a natural language description of the intent of the parameter

type – the data type of the parameter's value – must be one of String, Integer, Float, or Boolean.

multiValued – true if the parameter can take multiple-values (an array), false if the parameter takes only a single value. Shown above as

Multi.mandatory – true if a value must be provided for the parameter. Shown above as

Req(for required).

Both of our parameters are mandatory and accept an array of Strings as their value.

Next, default values are assigned to the parameters on the Parameter Settings page:

|

Here the “Patterns” parameter is selected, and the right pane

shows the list of values for this parameter, in this case the regular expressions

that match particular room numbering conventions. Notice the third pattern is

new, for matching the style of room numbers in the third building, which has room

numbers such as J2-A11.

1.2.1.2. Accessing Parameter Values from the Annotator Code

The class

org.apache.uima.tutorial.ex2.RoomNumberAnnotator has

overridden the initialize method. The initialize method is called by the UIMA

framework when the annotator is instantiated, so it is a good place to read

configuration parameter values. The default initialize method does nothing with

configuration parameters, so you have to override it. To see the code in Eclipse,

switch to the src folder, and open

org.apache.uima.tutorial.ex2. Here is the method

body:

/**

* @see AnalysisComponent#initialize(UimaContext)

*/

public void initialize(UimaContext aContext)

throws ResourceInitializationException {

super.initialize(aContext);

// Get config. parameter values

String[] patternStrings =

(String[]) aContext.getConfigParameterValue("Patterns");

mLocations =

(String[]) aContext.getConfigParameterValue("Locations");

// compile regular expressions

mPatterns = new Pattern[patternStrings.length];

for (int i = 0; i < patternStrings.length; i++) {

mPatterns[i] = Pattern.compile(patternStrings[i]);

}

}

Configuration parameter values are accessed through the UimaContext. As you will see in subsequent sections of this chapter, the UimaContext is the annotator's access point for all of the facilities provided by the UIMA framework – for example logging and external resource access.

The UimaContext's getConfigParameterValue

method takes the name of the parameter as an argument; this must match one of the

parameters declared in the descriptor. The return value of this method is a Java

Object, whose type corresponds to the declared type of the parameter. It is up to the

annotator to cast it to the appropriate type, String[] in this case.

If there is a problem retrieving the parameter values, the framework throws an exception. Generally annotators don't handle these, and just let them propagate up.

To see the configuration parameters working, run the Document Analyzer

application and select the descriptor

examples/descriptors/tutorial/ex2/RoomNumberAnnotator.xml

. In the example document WatsonConferenceRooms.txt, you

should see some examples of Hawthorne II room numbers that would not have been

detected by the ex1 version of RoomNumberAnnotator.

1.2.1.3. Supporting Reconfiguration

If you take a look at the Javadocs (located in the docs/api directory) for

org.apache.uima.analysis_component.AnaysisComponent

(which our annotator implements indirectly through JCasAnnotator_ImplBase),

you will see that there is a reconfigure() method, which is called by the containing

application through the UIMA framework, if the configuration parameter values

are changed.

The AnalysisComponent_ImplBase class provides a default implementation that just calls the annotator's destroy method followed by its initialize method. This works fine for our annotator. The only situation in which you might want to override the default reconfigure() is if your annotator has very expensive initialization logic, and you don't want to reinitialize everything if just one configuration parameter has changed. In that case, you can provide a more intelligent implementation of reconfigure() for your annotator.

1.2.1.4. Configuration Parameter Groups

For annotators with many sets of configuration parameters, UIMA supports organizing them into groups. It is possible to define a parameter with the same name in multiple groups; one common use for this is for annotators that can process documents in several languages and which want to have different parameter settings for the different languages.

The syntax for defining parameter groups in your descriptor is fairly

straightforward – see Chapter 2, Component Descriptor Reference for details. Values of

parameters defined within groups are accessed through the two-argument version

of UimaContext.getConfigParameterValue, which takes

both the group name and the parameter name as its arguments.

1.2.2. Logging

The UIMA SDK provides a logging facility, which is very similar to the java.util.logging.Logger class that was introduced in Java 1.4.

In the Java architecture, each logger instance is associated with a name. By convention, this name is often the fully qualified class name of the component issuing the logging call. The name can be referenced in a configuration file when specifying which kinds of log messages to actually log, and where they should go.

The UIMA framework supports this convention using the

UimaContext object. If you access a logger instance using

getContext().getLogger() within an Annotator, the logger

name will be the fully qualified name of the Annotator implementation class.

Here is an example from the process method of

org.apache.uima.tutorial.ex2.RoomNumberAnnotator:

getContext().getLogger().log(Level.FINEST,"Found: " + annotation);

The first argument to the log method is the level of the log output. Here, a value of FINEST indicates that this is a highly-detailed tracing message. While useful for debugging, it is likely that real applications will not output log messages at this level, in order to improve their performance. Other defined levels, from lowest to highest importance, are FINER, FINE, CONFIG, INFO, WARNING, and SEVERE.

If no logging configuration file is provided (see next section), the Java Virtual Machine defaults would be used, which typically set the level to INFO and higher messages, and direct output to the console.

If you specify the standard UIMA SDK Logger.properties,

the output will be directed to a file named uima.log, in the current working directory

(often the “project” directory when running from Eclipse, for

instance).

Note

When using Eclipse, the uima.log file, if written into the Eclipse workspace in the project uimaj-examples, for example, may not appear in the Eclipse package explorer view until you right-click the uimaj-examples project with the mouse, and select “Refresh”. This operation refreshes the Eclipse display to conform to what may have changed on the file system. Also, you can set the Eclipse preferences for the workspace to automatically refresh (Window → Preferences → General → Workspace, then click the “refresh automatically” checkbox.

1.2.2.1. Specifying the Logging Configuration

The standard UIMA logger uses the underlying Java 1.4 logging mechanism. You

can use the APIs that come with that to configure the logging. In addition, the

standard Java 1.4 logging initialization mechanisms will look for a Java System

Property named java.util.logging.config.file and if

found, will use the value of this property as the name of a standard

“properties” file, for setting the logging level. Please refer to

the Java 1.4. documentation for more information on the format and use of this

file.

Two sample logging specification property files can be found in the UIMA_HOME

directory where the UIMA SDK is installed:

config/Logger.properties, and

config/FileConsoleLogger.properties. These specify the same

logging, except the first logs just to a file, while the second logs both to a file and

to the console. You can edit these files, or create additional ones, as described

below, to change the logging behavior.

When running your own Java application, you can specify the location of the

logging configuration file on your Java command line by setting the Java system

property java.util.logging.config.file to be the logging

configuration filename. This file specification can be either absolute or

relative to the working directory. For example:

java "-Djava.util.logging.config.file=C:/Program Files/apache-uima/config/Logger.properties"

Note

In a shell script, you can use environment variables such as UIMA_HOME if convenient.

If you are using Eclipse to launch your application, you can set this property

in the VM arguments section of the Arguments tab of the run configuration screen. If

you've set an environment variable UIMA_HOME, you could for example, use the

string:

"-Djava.util.logging.config.file=${env_var:UIMA_HOME}/config/Logger.properties".

If you running the .bat or .sh files in the UIMA SDK's bin directory, you can specify the location of your

logger configuration file by setting the UIMA_LOGGER_CONFIG_FILE environment variable prior to running the script,

for example (on Windows):

set UIMA_LOGGER_CONFIG_FILE=C:/myapp/MyLogger.properties

1.2.2.2. Setting Logging Levels

Within the logging control file, the default global logging level specifies

which kinds of events are logged across all loggers. For any given facility this

global level can be overridden by a facility specific level. Multiple handlers are

supported. This allows messages to be directed to a log file, as well as to a

“console”. Note that the ConsoleHandler also has a separate level

setting to limit messages printed to the console. For example: .level=

INFO

The properties file can change where the log is written, as well.

Facility specific properties allow different logging for each class, as

well. For example, to set the com.xyz.foo logger to only log SEVERE messages:

com.xyz.foo.level = SEVERE

If you have a sample annotator in the package

org.apache.uima.SampleAnnotator you can set the log level

by specifying: org.apache.uima.SampleAnnotator.level =

ALL

There are other logging controls; for a full discussion, please read the

contents of the Logger.properties file and the Java

specification for logging in Java 1.4.

1.2.2.3. Format of logging output

The logging output is formatted by handlers specified in the properties file for configuring logging, described above. The default formatter that comes with the UIMA SDK formats logging output as follows:

Timestamp - threadID: sourceInfo: Message level:

message

Here's an example:

7/12/04 2:15:35 PM - 10:

org.apache.uima.util.TestClass.main(62): INFO: You are not logged

in!

1.2.2.4. Meaning of the logging severity levels

These levels are defined by the Java logging framework, which was incorporated into Java as of the 1.4 release level. The levels are defined in the Javadocs for java.util.logging.Level, and include both logging and tracing levels:

OFF is a special level that can be used to turn off logging.

ALL indicates that all messages should be logged.

CONFIG is a message level for configuration messages. These would typically occur once (during configuration) in methods like

initialize().INFO is a message level for informational messages, for example, connected to server IP: 192.168.120.12

WARNING is a message level indicating a potential problem.

SEVERE is a message level indicating a serious failure.

Tracing levels, typically used for debugging:

FINE is a message level providing tracing information, typically at a collection level (messages occurring once per collection).

FINER indicates a fairly detailed tracing message, typically at a document level (once per document).

FINEST indicates a highly detailed tracing message.

1.2.2.5. Using the logger outside of an annotator

An application using UIMA may want to log its messages using the same logging framework. This can be done by getting a reference to the UIMA logger, as follows:

Logger logger = UIMAFramework.getLogger(TestClass.class);

The optional class argument allows filtering by class (if the log handler supports this). If not specified, the name of the returned logger instance is “org.apache.uima”.

1.2.2.6. Changing the underlying UIMA logging implementation

By default the UIMA framework use, under the hood of the UIMA Logger interface, the Java logging framework to do logging. But it is possible to change the logging implementation that UIMA use from Java logging to an arbitrary logging system when specifying the system property

-Dorg.apache.uima.logger.class=<loggerClass>

when the UIMA framework is started.

The specified logger class must be available in the classpath and have to implement the

org.apache.uima.util.Logger interface.

UIMA also provides a logging implementation that use Apache Log4j instead of Java logging. To use Log4j you have to provide the Log4j jars in the classpath and your application must specify the logging configuration as shown below.

-Dorg.apache.uima.logger.class=<org.apache.uima.util.impl.Log4jLogger_impl>

1.3. Building Aggregate Analysis Engines

1.3.1. Combining Annotators

The UIMA SDK makes it very easy to combine any sequence of Analysis Engines to form an Aggregate Analysis Engine. This is done through an XML descriptor; no Java code is required!

If you go to the examples/descriptors/tutorial/ex3

folder (in Eclipse, it's in your uimaj-examples project, under the

descriptors/tutorial/ex3 folder), you will find a

descriptor for a TutorialDateTime annotator. This annotator detects dates and

times. To see what this annotator can do, try it out

using the Document Analyzer. If you are curious as to how this annotator works, the

source code is included, but it is not necessary to understand the code at this

time.

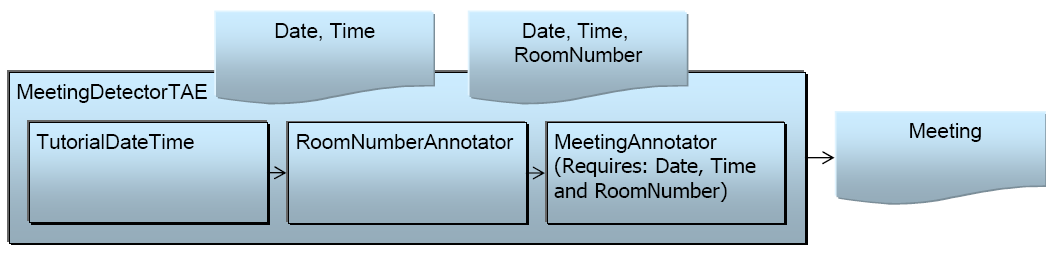

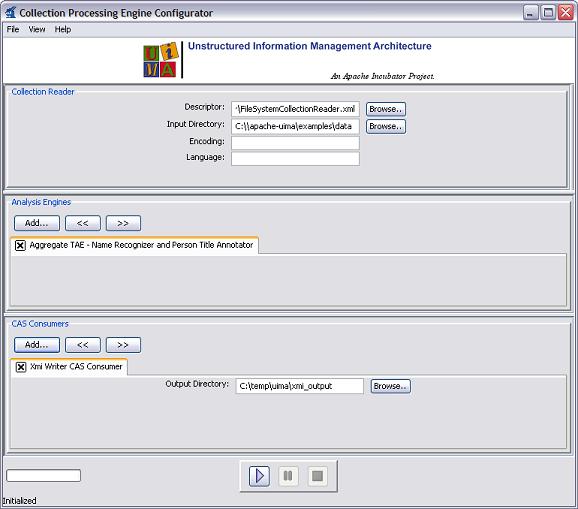

We are going to combine the TutorialDateTime annotator with the RoomNumberAnnotator to create an aggregate Analysis Engine. This is illustrated in the following figure:

The descriptor that does this is named

RoomNumberAndDateTime.xml, which you can open in the

Component Descriptor Editor plug-in. This is in the uimaj-examples project in the

folder descriptors/tutorial/ex3.

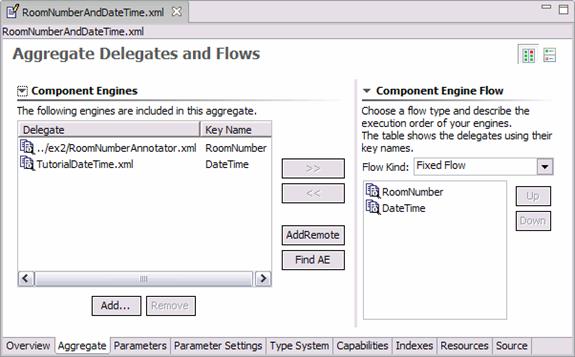

The “Aggregate” page of the Component Descriptor Editor is used to define which components make up the aggregate. A screen shot is shown below. (If you are not using Eclipse, see Section 1.8, “Analysis Engine XML Descriptor” for the actual XML syntax for Aggregate Analysis Engine Descriptors.)

|

On the left side of the screen is the list of component engines that make up the aggregate – in this case, the TutorialDateTime annotator and the RoomNumberAnnotator. To add a component, you can click the “Add” button and browse to its descriptor. You can also click the “Find AE” button and search for an Analysis Engine in your Eclipse workspace.

Note

The “AddRemote” button is used for adding components which run remotely (for example, on another machine using a remote networking connection). This capability is described in section Section 3.6.3, “Calling a UIMA Service”,

The order of the components in the left pane does not imply an order of

execution. The order of execution, or “flow” is determined in the

“Component Engine Flow” section on the right. UIMA supports

different types of algorithms (including user-definable) for determining the

flow. Here we pick the simplest: FixedFlow. We have chosen to

have the RoomNumberAnnotator execute first, although in this case it

doesn't really matter, since the RoomNumber and DateTime annotators do not

have any dependencies on one another.

If you look at the “Type System” page of the Component Descriptor Editor, you will see that it displays the type system but is not editable. The Type System of an Aggregate Analysis Engine is automatically computed by merging the Type Systems of all of its components.

Warning

If the components have different definitions for the same type name, The Component Descriptor Editor will show a warning. It is possible to continue past this warning, in which case your aggregate's type system will have the correct “merged” type definition that contains all of the features defined on that type by all of your components. However, it is not recommended to use this feature in conjunction with JCAS, since the JCAS Java Class definitions cannot be so easily merged. See Section 5.5, “Merging Types” for more information.

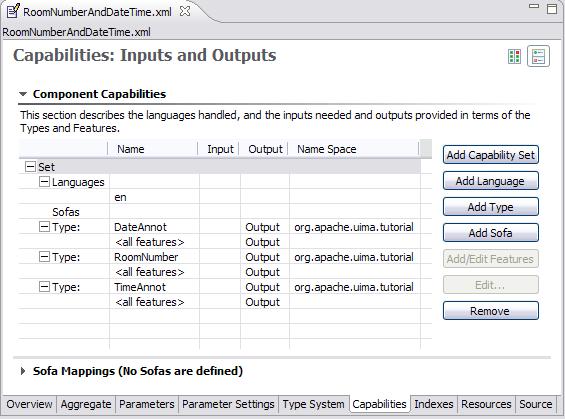

The Capabilities page is where you explicitly declare the aggregate Analysis Engine's inputs and outputs. Sofas and Languages are described later.

|

Note that it is not automatically assumed that all outputs of each component Analysis Engine (AE) are passed through as outputs of the aggregate AE. If, for example, the TutorialDateTime annotator also produced Word and Sentence annotations, but those were not of interest as output in this case, we can exclude them from the list of outputs.

You can run this AE using the Document Analyzer in the same way that you run any

other AE. Just select the examples/descriptors/tutorial/ex3/

RoomNumberAndDateTime.xml descriptor and click the Run button. You

should see that RoomNumbers, Dates, and Times are all shown:

|

1.3.2. AAEs can also contain CAS Consumers

In addition to aggregating Analysis Engines, Aggregates can also contain CAS

Consumers (see Chapter 2, Collection Processing Engine Developer's Guide, or even a mixture of these components with regular

Analysis Engines. The UIMA Examples has an example of an Aggregate which contains

both an analysis engine and a CAS consumer, in

examples/descriptors/MixedAggregate.xml.

Analysis Engines support the collectionProcessComplete

method, which is particularly important for many CAS Consumers. If

an application (or a Collection Processing Engine) calls

collectionProcessComplete no an aggregate, the framework

will deliver that call to all of the components of the aggregate. If you use

one of the built-in flow types (fixedFlow or capabilityLanguageFlow), then the

order specified in that flow will be the same order in which the

collectionProcessComplete calls are made to the components.

If a custom flow is used, then the calls will be made in arbitrary order.

1.3.3. Reading the Results of Previous Annotators

So far, we have been looking at annotators that look directly at the document text. However, annotators can also use the results of other annotators. One useful thing we can do at this point is look for the co-occurrence of a Date, a RoomNumber, and two Times – and annotate that as a Meeting.

The CAS maintains indexes of annotations, and from an index you can obtain an iterator that allows you to step through all annotations of a particular type. Here's some example code that would iterate over all of the TimeAnnot annotations in the JCas:

FSIndex timeIndex = aJCas.getAnnotationIndex(TimeAnnot.type);

Iterator timeIter = timeIndex.iterator();

while (timeIter.hasNext()) {

TimeAnnot time = (TimeAnnot)timeIter.next();

//do something

}

Note

You can also use the method

JCAS.getJFSIndexRepository().getAllIndexedFS(YourClass.type), which returns an iterator

over all instances of YourClass in no particular order. This can be useful for types

that are not subtypes of the built-in Annotation type and which therefore have no default sort order.

Also, if you've defined your own custom index as described in Section 2.4.1.7, “Index Definition”, you can get an iterator over that

specific index by calling aJCas.getJFSIndexRepository().getIndex(label).

The getIndex(...) method has also a 2 argument form; the second argument,

if used, specialized the index to subtype of the type the index was declared to index. For instance,

if you defined an index called "allEvents" over the type Event, and wanted

to get an index over just a particular subtype of event, say, TimeEvent,

you can ask for that index using

aJCas.getJFSIndexRepository().getIndex("allEvents", TimeEvent.type).

Now that we've explained the basics, let's take a look at the process method for

org.apache.uima.tutorial.ex4.MeetingAnnotator. Since we're looking for a

combination of a RoomNumber, a Date, and two Times, there are four nested iterators. (There's surely a

better algorithm for doing this, but to keep things simple we're just going to look at every combination

of the four items.)

For each combination of the four annotations, we compute the span of text that includes all of them, and then we check to see if that span is smaller than a “window” size, a configuration parameter. There are also some checks to make sure that we don't annotate the same span of text multiple times. If all the checks pass, we create a Meeting annotation over the whole span. There's really nothing to it!

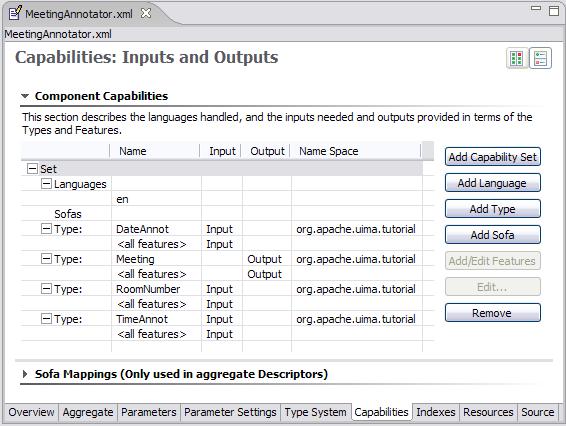

The XML descriptor, located in

examples/descriptors/tutorial/ex4/MeetingAnnotator.xml , is also very

straightforward. An important difference from previous descriptors is that this is the first annotator

we've discussed that has input requirements. This can be seen on the “Capabilities”

page of the Component Descriptor Editor:

|

If we were to run the MeetingAnnotator on its own, it wouldn't detect anything because it

wouldn't have any input annotations to work with. The required input annotations can be produced by the

RoomNumber and DateTime annotators. So, we create an aggregate Analysis Engine containing these two

annotators, followed by the Meeting annotator. This aggregate is illustrated in Figure 1.2, “An Aggregate Analysis Engine where an internal component uses output from previous

engines”. The descriptor for this is in

examples/descriptors/tutorial/ex4/MeetingDetectorAE.xml . Give it a try in the

Document Analyzer.

|

Figure 1.2. An Aggregate Analysis Engine where an internal component uses output from previous engines

1.4. Other examples

The UIMA SDK include several other examples you may find interesting, including

SimpleTokenAndSentenceAnnotator – a simple tokenizer and sentence annotator.

XmlDetagger – A multi-sofa annotator that does XML detagging. Multiple Sofas (Subjects of Analysis) are described in a later – see Chapter 6, Multiple CAS Views of an Artifact. Reads XML data from the input Sofa (named "xmlDocument"); this data can be stored in the CAS as a string or array, or it can be a URI to a remote file. The XML is parsed using the JVM's default parser, and the plain-text content is written to a new sofa called "plainTextDocument".

PersonTitleDBWriterCasConsumer – a sample CAS Consumer which populates a relational database with some annotations. It uses JDBC and in this example, hooks up with the Open Source Apache Derby database.

1.5. Additional Topics

1.5.1. Contract: Annotator Methods Called by the Framework

The UIMA framework ensures that an Annotator instance is called by only one thread at a time. An instance never has to worry about running some method on one thread, and then asynchronously being called using another thread. This approach simplifies the design of annotators – they do not have to be designed to support multi-threading. When multiple threading is wanted, for performance, multiple instances of the Annotator are created, each one running on just one thread.

The following table defines the methods called by the framework, when they are called, and the requirements annotator implementations must follow.

| Method | When Called by Framework | Requirements |

|---|---|---|

| initialize | Typically only called once, when instance is created. Can be called

again if application does a reinitialize call and the default behavior

isn't overridden (the default behavior for reinitialize is to call

destroy followed by

initialize | Normally does one-time initialization, including reading of configuration parameters. If the application changes the parameters, it can call initialize to have the annotator re-do its initialization. |

| typeSystemInit | Called before process whenever the type system

in the CAS being passed in differs from what was previously passed in a

process call (and called for the first CAS passed in,

too). The Type System being passed to an annotator only changes in the case of

remote annotators that are active as servers, receiving possibly

different type systems to operate on. | Typically, users of JCas do not implement any method for this. An annotator can use this call to read the CAS type system and setup any instance variables that make accessing the types and features convenient. |

| process | Called once for each CAS. Called by the application if not using Collection Processing Manager (CPM); the application calls the process method on the analysis engine, which is then delegated by the framework to all the annotators in the engine. For Collection Processing application, the CPM calls the process method. If the application creates and manages your own Collection Processing Engine via API calls (see Javadocs), the application calls this on the Collection Processing Engine, and it is delegated by the framework to the components. | Process the CAS, adding and/or modifying elements in it |

| destroy | This method can be called by applications, and is also called by the

Collection Processing Manager framework when the collection processing

completes. It is also called on Aggregate delegate components, if those

components successfully complete their initialize call, if

a subsequent delegate (or flow controller) in the aggregate fails to initialize.

This allows components which need to clean up things done during initialization

to do so. It is up to the component writer to use a try/finally construct during initialization

to cleanup from errors that occur during initialization within one component.

The destroy call on an aggregate is

propagated to all contained analysis engines. | An annotator should release all resources, close files, close database connections, etc., and return to a state where another initialize call could be received to restart. Typically, after a destroy call, no further calls will be made to an annotator instance. |

| reconfigure | This method is never called by the framework, unless an application calls it on the Engine object – in which case it the framework propagates it to all annotators contained in the Engine. Its purpose is to signal that the configuration parameters have changed. | A default implementation of this calls destroy, followed by initialize. This is the only case where initialize would be called more than once. Users should implement whatever logic is needed to return the annotator to an initialized state, including re-reading the configuration parameter data. |

1.5.2. Reporting errors from Annotators

There are two broad classes of errors that can occur: recoverable and unrecoverable. Because Annotators are often expected to process very large numbers of artifacts (for example, text documents), they should be written to recover where possible.

For example, if an upstream annotator created some input for an annotator which is invalid, the annotator may want to log this event, ignore the bad input and continue. It may include a notification of this event in the CAS, for further downstream annotators to consider. Or, it may throw an exception (see next section) – but in this case, it cannot do any further processing on that document.

Note

The choice of what to do can be made configurable, using the configuration parameters.

1.5.3. Throwing Exceptions from Annotators

Let's say an invalid regular expression was passed as a parameter to the RoomNumberAnnotator. Because this is an error related to the overall configuration, and not something we could expect to ignore, we should throw an appropriate exception, and most Java programmers would expect to do so like this:

throw new ResourceInitializationException(

"The regular expression " + x + " is not valid.");

UIMA, however, does not do it this way. All UIMA exceptions are

internationalized, meaning that they support translation

into other languages. This is accomplished by eliminating hardcoded message

strings and instead using external message digests. Message digests are files

containing (key, value) pairs. The key is used in the Java code instead of the actual

message string. This allows the message string to be easily translated later by

modifying the message digest file, not the Java code. Also, message strings in the

digest can contain parameters that are filled in when the exception is thrown. The

format of the message digest file is described in the Javadocs for the Java class

java.util.PropertyResourceBundle and in the load method of

java.util.Properties.

The first thing an annotator developer must choose is what Exception class to use. There are three to choose from:

ResourceConfigurationException should be thrown from the annotator's reconfigure() method if invalid configuration parameter values have been specified.

ResourceInitializationException should be thrown from the annotator's initialize() method if initialization fails for any reason (including invalid configuration parameters).

AnalysisEngineProcessException should be thrown from the annotator's process() method if the processing of a particular document fails for any reason.

Generally you will not need to define your own custom exception classes, but if you do they must extend one of these three classes, which are the only types of Exceptions that the annotator interface permits annotators to throw.

All of the UIMA Exception classes share common constructor varieties. There are four possible arguments:

The name of the message digest to use (optional – if not specified the default UIMA message digest is used).

The key string used to select the message in the message digest.

An object array containing the parameters to include in the message. Messages can have substitutable parts. When the message is given, the string representation of the objects passed are substituted into the message. The object array is often created using the syntax new Object[]{x, y}.

Another exception which is the “cause” of the exception you are throwing. This feature is commonly used when you catch another exception and rethrow it. (optional)

If you look at source file (folder: src in Eclipse)

org.apache.uima.tutorial.ex5.RoomNumberAnnotator, you

will see the following code:

try {

mPatterns[i] = Pattern.compile(patternStrings[i]);

}

catch (PatternSyntaxException e) {

throw new ResourceInitializationException(

MESSAGE_DIGEST, "regex_syntax_error",

new Object[]{patternStrings[i]}, e);

}

where the MESSAGE_DIGEST constant has the value

"org.apache.uima.tutorial.ex5.RoomNumberAnnotator_Messages".

Message digests are specified using a dotted name, just like Java classes. This

file, with the .properties extension, must be present in the class path. In Eclipse,

you find this file under the src folder, in the package

org.apache.uima.tutorial.ex5, with the name

RoomNumberAnnotator_Messages.properties. Outside of Eclipse, you can find this

in the uimaj-examples.jar with the name

org/apache/uima/tutorial/ex5/RoomNumberAnnotator_Messages.properties.

If you look in this file you will see the line:

regex_syntax_error = {0} is not a valid regular expression.which is the error message for the example exception we showed above. The placeholder {0} will be filled by the toString() value of the argument passed to the exception constructor – in this case, the regular expression pattern that didn't compile. If there were additional arguments, their locations in the message would be indicated as {1}, {2}, and so on.

If a message digest is not specified in the call to the exception constructor, the

default is UIMAException.STANDARD_MESSAGE_CATALOG (whose

value is “org.apache.uima.UIMAException_Messages

” in the current release but may change). This message digest is located in the

uima-core.jar file at

org/apache/uima/UIMAException_messages.properties

– you can take a look to see if any of these exception messages are useful to

use.

To try out the regex_syntax_error exception, just use the Document Analyzer to

run

examples/descriptors/tutorial/ex5/RoomNumberAnnotator.xml

, which happens to have an invalid regular expression in its configuration parameter

settings.

To summarize, here are the steps to take if you want to define your own exception message:

Create a file with the .properties extension, where you declare message keys and their associated messages, using the same syntax as shown above for the regex_syntax_error exception. The properties file syntax is more completely described in the Javadocs for the load method of the java.util.Properties class.

Put your properties file somewhere in your class path (it can be in your annotator's .jar file).

Define a String constant (called MESSAGE_DIGEST for example) in your annotator

code whose value is the dotted name of this properties file. For example, if your

properties file is inside your jar file at the location

org/myorg/myannotator/Messages.properties, then this

String constant should have the value

org.myorg.myannotator.Messages. Do not include the

.properties extension. In Java Internationalization terminology, this is called

the Resource Bundle name. For more information see the Javadocs for the

PropertyResourceBundle class.

In your annotator code, throw an exception like this:

throw new ResourceInitializationException(

MESSAGE_DIGEST, "your_message_name",

new Object[]{param1,param2,...});

You may also wish to look at the Javadocs for the UIMAException class.

For more information on Java's internationalization features, see the Java Internationalization Guide.

1.5.4. Accessing External Resources

External Resources are Java objects that have a life cycle where they are (optionally) initialized at startup time by reading external data from a file or via a URL (which can access information over the http protocol, for instance). It is not required that Extermal Resource objects do any external data reading to initialize themselves. However, this is such a common use case, that we will presume this mode of operation in the description below.

Sometimes you may want an annotator to read from an external resource, such as a URL or a file – for example, a long list of keys and values that you are going to build into a HashMap. You could, of course, just introduce a configuration parameter that holds the absolute path or URL to this resource, and build the HashMap in your annotator's initialize method. However, this is not the best solution for three reasons:

Including an absolute path in your descriptor to specify the initialization data makes your annotator difficult for others to use. Each user will need to edit this descriptor and set the absolute path to a value appropriate for his or her installation.

You cannot share the created Java object(s), e.g., a HashMap, between multiple annotators. Also, in some deployment scenarios there may be more than one instance of your annotator, and you would like to have the option for them to share the same Java Object(s).

Your annotator would become dependent on a particular implementation of the Java Object(s). It would be better if there was a decoupling between the actual implementation, and the API used to access it.

A better way to create these sharable Java objects and initialize them via external disk or URL sources is through the ResourceManager component. In this section we are going to show an example of how to use the Resource Manager.

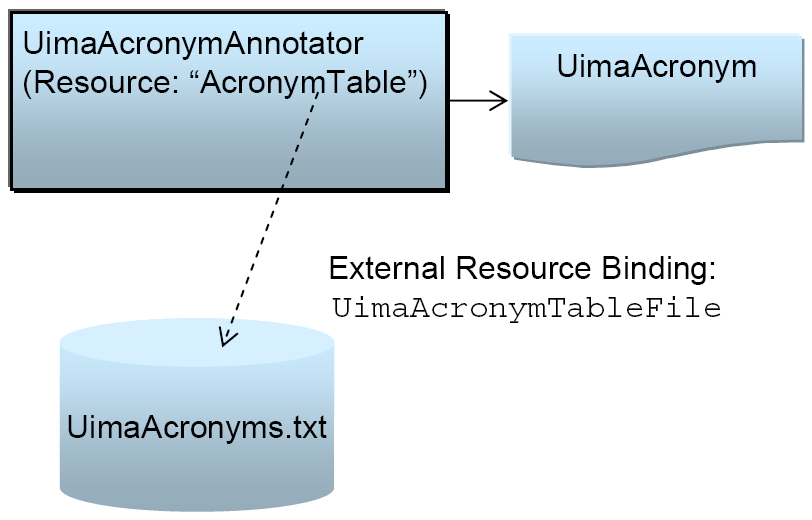

This example annotator will annotate UIMA acronyms (e.g. UIMA, AE, CAS, JCas) and store the acronym's expanded form as a feature of the annotation. The acronyms and their expanded forms are stored in an external file.

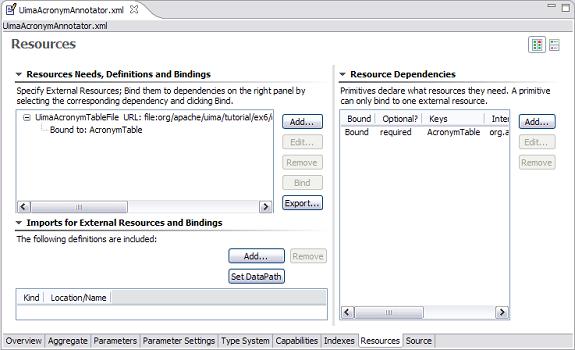

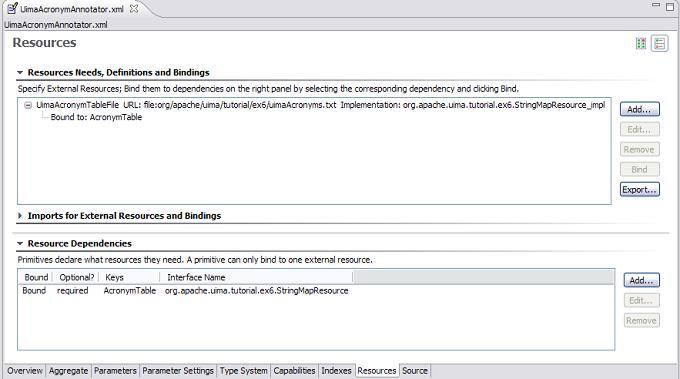

First, look at the

examples/descriptors/tutorial/ex6/UimaAcronymAnnotator.xml

descriptor.

|

The values of the rows in the two tables are longer than can be easily shown. You can click the small button at the top right to shift the layout from two side-by-side tables, to a vertically stacked layout. You can also click the small twisty on the “Imports for External Resources and Bindings” to collapse this section, because it's not used here. Then the same screen will appear like this:

|

The top window has a scroll bar allowing you to see the rest of the line.

1.5.4.1. Declaring Resource Dependencies

The bottom window is where an annotator declares an external resource dependency. The XML for this is as follows:

<externalResourceDependency>

<key>AcronymTable</key>

<description>Table of acronyms and their expanded forms.</description>

<interfaceName>

org.apache.uima.tutorial.ex6.StringMapResource

</interfaceName>

</externalResourceDependency>

The <key> value (AcronymTable) is the name by which the annotator

identifies this resource. The key must be unique for all resources that this

annotator accesses, but the same key could be used by different annotators to mean

different things. The interface name

(org.apache.uima.tutorial.ex6.StringMapResource) is

the Java interface through which the annotator accesses the data. Specifying an

interface name is optional. If you do not specify an interface name, annotators

will instead get an interface which can provide direct access to the

data resource (file or URL) that is

associated with this external resource.

1.5.4.2. Accessing the Resource from the UimaContext

If you look at the

org.apache.uima.tutorial.ex6.UimaAcronymAnnotator

source, you will see that the annotator accesses this resource from the

UimaContext by calling:

StringMapResource mMap =

(StringMapResource)getContext().getResourceObject("AcronymTable");

The object returned from the getResourceObject method

will implement the interface declared in the

<interfaceName> section of the descriptor,

StringMapResource in this case. The annotator code does not

need to know the location of external data that may be used to initilize this

object, nor the Java class that might be used to read the

data and implement the StringMapResource

interface.

Note that if we did not specify a Java interface in our descriptor, our annotator could directly access the resource data as follows:

InputStream stream = getContext().getResourceAsStream("AcronymTable");

If necessary, the annotator could also determine the location of the resource file, by calling:

URI uri = getContext().getResourceURI("AcronymTable");

These last two options are only available in the case where the descriptor does not declare a Java interface.

Note

The methods for getting access to resources include getResourceURL. That

method returns a URL, which may contain spaces encoded as %20. url.getPath() would

return the path without decoding these %20 into spaces. getResourceURI

on the other hand, returns a URI, and the uri.getPath() does

do the conversion of %20 into spaces. See also getResourceFilePath,

which does a getResourceURI followed by uri.getPath().

1.5.4.3. Declaring Resources and Bindings

Refer back to the top window in the Resources page of the Component Descriptor Editor. This is where we specify the location of the resource data, and the Java class used to read the data. For the example, this corresponds to the following section of the descriptor:

<resourceManagerConfiguration>

<externalResources>

<externalResource>

<name>UimaAcronymTableFile</name>

<description>

A table containing UIMA acronyms and their expanded forms.

</description>

<fileResourceSpecifier>

<fileUrl>file:org/apache/uima/tutorial/ex6/uimaAcronyms.txt

</fileUrl>

</fileResourceSpecifier>

<implementationName>

org.apache.uima.tutorial.ex6.StringMapResource_impl

</implementationName>

</externalResource>

</externalResources>

<externalResourceBindings>

<externalResourceBinding>

<key>AcronymTable</key>

<resourceName>UimaAcronymTableFile</resourceName>

</externalResourceBinding>

</externalResourceBindings>

</resourceManagerConfiguration>

The first section of this XML declares an externalResource, the

UimaAcronymTableFile. With this, the fileUrl element

specifies the path to the data file. This can be a file on the file system,

but can also be a remote resource access via, e.g., the http protocol.

The fileUrl element doesn't have to be a "file", it can be a URL.

This can be an absolute URL (e.g. one that starts

with file:/ or file:///, or file://my.host.org/), but that is not recommended

because it makes installation of your component more difficult, as noted earlier.

Better is a relative URL, which will be looked up within the classpath (and/or

datapath), as used in this example. In this case, the file

org/apache/uima/tutorial/ex6/uimaAcronyms.txt is

located in uimaj-examples.jar, which is in the classpath.

If you look in this file you will see the definitions of several UIMA

acronyms.

The second section of the XML declares an externalResourceBinding, which

connects the key AcronymTable, declared in the

annotator's external resource dependency, to the actual resource name

UimaAcronymTableFile. This is rather trivial in this case;

for more on bindings see the example

UimaMeetingDetectorAE.xml below. There is no global

repository for external resources; it is up to the user to define each resource

needed by a particular set of annotators.

In the Component Descriptor Editor, bindings are indicated below the

external resource. To create a new binding, you select an external resource (which

must have previously been defined), and an external resource dependency, and then

click the Bind button, which only enables if you have

selected two things to bind together.

When the Analysis Engine is initialized, it creates a single instance of

StringMapResource_impl and loads it with the contents of

the data file. This means that the framework calls the instance's load

method, passing it an instance of DataResource, from which you can obtain

a stream or URI/URL of the external resource that was declared in the external resource;

for resources where

loading does not make sense, you can implement a load method

which ignores its argument and just returns, or performes whatever

initialization is appropriate at startup time. See the Javadocs for

SharedResourceObject for details on this.

The UimaAcronymAnnotator then accesses the data through the

StringMapResource interface. This single instance could

be shared among multiple annotators, as will be explained later.

Warning

Because the implementation of the resource is shared, you should insure your implementation is thread-safe, as it could be called multiple times on multiple threads, simultaneously.

Note that all resource implementation classes (e.g. StringMapResource_impl in the provided example) must be declared public must not be declared abstract, and must have public, 0-argument constructors, so that they can be instantiated by the framework. (Although Java classes in which you do not define any constructor will, by default, have a 0-argument constructor that doesn't do anything, a class in which you have defined at least one constructor does not get a default 0-argument constructor.)

All resource implementation classes that provide access to resource data

must also implement the interface org.apache.uima.resource.SharedResourceObject.

The UIMA Framework

will invoke this interface's only method, load,

after this object has been instantiated. The implementation of this method

can then read data from the specified DataResource

and use that data to initialize this object. It can also do whatever

resource initialization might be appropriate to do at startup time.

This annotator is illustrated in Figure 1.3, “External Resource Binding”. To see it in action, just run it using the Document Analyzer. When it finishes, open up the UIMA_Seminars document in the processed results window, (double-click it), and then left-click on one of the highlighted terms, to see the expandedForm feature's value.

By designing our annotator in this way, we have gained some flexibility. We can freely replace the StringMapResource_impl class with any other implementation that implements the simple StringMapResource interface. (For example, for very large resources we might not be able to have the entire map in memory.) We have also made our external resource dependencies explicit in the descriptor, which will help others to deploy our annotator.

1.5.4.4. Sharing Resources among Annotators

Another advantage of the Resource Manager is that it allows our data to be shared between annotators. To demonstrate this we have developed another annotator that will use the same acronym table. The UimaMeetingAnnotator will iterate over Meeting annotations discovered by the Meeting Detector we previously developed and attempt to determine whether the topic of the meeting is related to UIMA. It will do this by looking for occurrences of UIMA acronyms in close proximity to the meeting annotation. We could implement this by using the UimaAcronymAnnotator, of course, but for the sake of this example we will have the UimaMeetingAnnotator access the acronym map directly.

The Java code for the UimaMeetingAnnotator in example 6 creates a new type, UimaMeeting, if it finds a meeting within 50 characters of the UIMA acronym.

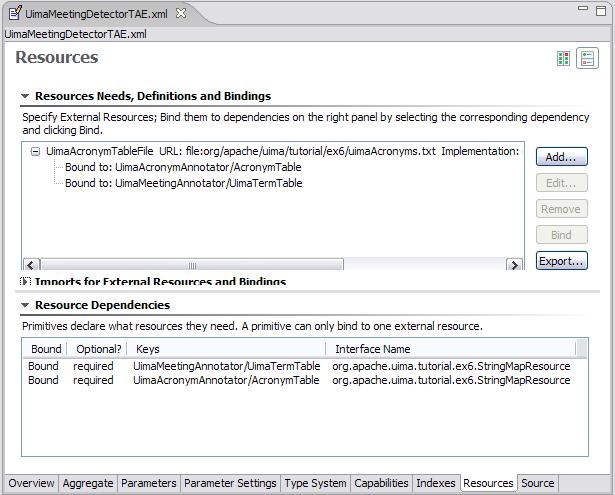

We combine three analysis engines, the UimaAcronymAnnotator to annotate UIMA acronyms, the MeetingDectector from example 4 to find meetings and finally the UimaMeetingAnnotator to annotate just meetings about UIMA. Together these are assembled to form the new aggregate analysis engine, UimaMeetingDectector. This aggregate and the sharing of a common resource are illustrated in Figure 1.4, “Component engines of an aggregate share a common resource”.

The important thing to notice is in the

UimaMeetingDetectorAE.xml aggregate descriptor. It

includes both the UimaMeetingAnnotator and the UimaAcronymAnnotator, and

contains a single declaration of the UimaAcronymTableFile resource. (The actual

example has the order of the first two annotators reversed versus the above

picture, which is OK since they do not depend on one another).

It also binds the resources as follows:

|

<externalResourceBindings>

<externalResourceBinding>

<key>UimaAcronymAnnotator/AcronymTable</key>

<resourceName>UimaAcronymTableFile</resourceName>

</externalResourceBinding>

<externalResourceBinding>

<key>UimaMeetingAnnotator/UimaTermTable</key>

<resourceName>UimaAcronymTableFile</resourceName>

</externalResourceBinding>

</externalResourceBindings>

This binds the resource dependencies of both the UimaAcronymAnnotator (which uses the name AcronymTable) and UimaMeetingAnnotator (which uses UimaTermTable) to the single declared resource named UimaAcronymFile. Therefore they will share the same instance. Resource bindings in the aggregate descriptor override any resource declarations in individual annotator descriptors.

If we wanted to have the annotators use different acronym tables, we could easily do that. We would simply have to change the resourceName elements in the bindings so that they referred to two different resources. The Resource Manager gives us the flexibility to make this decision at deployment time, without changing any Java code.

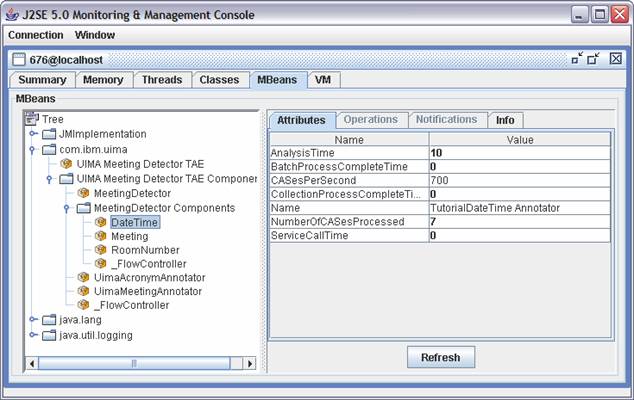

1.5.4.5. Threading and Shared Resources