Public Types |

| typedef TAO_ESF_Connected_Command< TAO_ESF_Delayed_Changes< PROXY, COLLECTION, ITERATOR, ACE_SYNCH_USE >, PROXY > | Connected_Command |

| typedef TAO_ESF_Reconnected_Command< TAO_ESF_Delayed_Changes< PROXY, COLLECTION, ITERATOR, ACE_SYNCH_USE >, PROXY > | Reconnected_Command |

| typedef TAO_ESF_Disconnected_Command< TAO_ESF_Delayed_Changes< PROXY, COLLECTION, ITERATOR, ACE_SYNCH_USE >, PROXY > | Disconnected_Command |

| typedef TAO_ESF_Shutdown_Command< TAO_ESF_Delayed_Changes< PROXY, COLLECTION, ITERATOR, ACE_SYNCH_USE > > | Shutdown_Command |

Public Member Functions |

| | TAO_ESF_Delayed_Changes (void) |

| | TAO_ESF_Delayed_Changes (const COLLECTION &collection) |

| int | busy (void) |

| int | idle (void) |

| int | execute_delayed_operations (void) |

| void | connected_i (PROXY *proxy) |

| void | reconnected_i (PROXY *proxy) |

| void | disconnected_i (PROXY *proxy) |

| void | shutdown_i (void) |

| virtual void | for_each (TAO_ESF_Worker< PROXY > *worker) |

| virtual void | connected (PROXY *proxy) |

| virtual void | reconnected (PROXY *proxy) |

| virtual void | disconnected (PROXY *proxy) |

| | Remove an element from the collection. |

| virtual void | shutdown (void) |

| | The EC is shutting down, must release all the elements. |

Private Types |

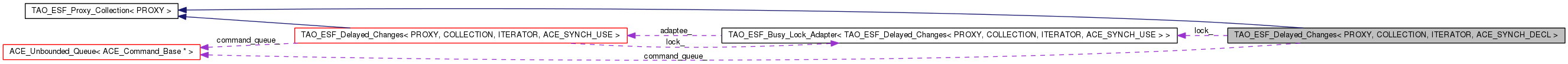

| typedef TAO_ESF_Busy_Lock_Adapter< TAO_ESF_Delayed_Changes< PROXY, COLLECTION, ITERATOR, ACE_SYNCH_USE > > | Busy_Lock |

Private Attributes |

| COLLECTION | collection_ |

| Busy_Lock | lock_ |

| ACE_SYNCH_MUTEX_T | busy_lock_ |

| ACE_SYNCH_CONDITION_T | busy_cond_ |

| CORBA::ULong | busy_count_ |

| CORBA::ULong | write_delay_count_ |

| CORBA::ULong | busy_hwm_ |

| | Control variables for the concurrency policies. |

| CORBA::ULong | max_write_delay_ |

| ACE_Unbounded_Queue< ACE_Command_Base * > | command_queue_ |

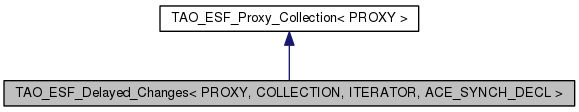

template<class PROXY, class COLLECTION, class ITERATOR, ACE_SYNCH_DECL>

class TAO_ESF_Delayed_Changes< PROXY, COLLECTION, ITERATOR, ACE_SYNCH_DECL >

TAO_ESF_Delayed_Operations.

This class implements the Delayed Operations protocol to solve the concurrency challenges outlined in the documentation of TAO_ESF_Proxy_Collection. In short, the class delays changes by putting them on an "operation queue". The operations are stored as command objects in this queue and executed once the system is quiescent (i.e. no threads are iterating over the collection). The algorithm implemented so far is:

- If a thread is using the set then it increases the busy count by calling the busy() method. Once the thread has stopped using the collection, the idle() method is invoked and the busy count is decreased. A helper class (Busy_Lock) is used to hide this protocol behind the familiar GUARD idiom.

- If the busy count reaches busy_hwm then the thread must wait until the count reaches 0 again. This can be used to control the maximum concurrency in the EC, matching it (for example) with the number of processors. Setting the concurrency to a high value (say one million) allows an arbitrary number of threads to execute concurrently.

- If a modification is posted to the collection we need to execute it at some point. Using busy_hwm alone would not work, because the HWM may never be reached, so another form of control is needed. We use a second counter that keeps track of how many threads have used the set since the modification was posted. If this number of threads reaches max_write_delay then we don't allow any more threads to go in; eventually the thread count reaches 0 and we can proceed with the operations.

- There is one aspect of concurrency that can be problematic: if a thread pushes events as part of an upcall then the same thread could be counted twice. We need to keep track of the threads that are dispatching events and not increase (or decrease) the reference count when a thread iterates twice over the same set.

This solves the major problems, but there are other issues to be addressed:

- How do we ensure that the operations are eventually executed?

- How do we simplify the execution of the locking protocol for clients of this class?

- How do we minimize overhead for single threaded execution?

- How do we minimize the overhead for the cases where the threads dispatching events don't post changes to the collection?
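The counting protocol described above can be illustrated with a minimal sketch. This is not the TAO implementation: it uses only the C++ standard library, and the names `DelayedChangesSketch`, `BusyGuard`, `post`, `size` and `pending` are hypothetical stand-ins chosen for the example. It assumes `busy_hwm` and `max_write_delay` are both at least 1, and it does not handle the nested-upcall case from the last point of the algorithm (a thread that calls busy() twice is counted twice).

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <set>
#include <utility>

// Hypothetical, simplified stand-in for the protocol; not the TAO class.
template <class Proxy>
class DelayedChangesSketch {
public:
  DelayedChangesSketch(std::size_t busy_hwm, std::size_t max_write_delay)
    : busy_hwm_(busy_hwm), max_write_delay_(max_write_delay) {}

  // A thread is about to iterate: wait while either limit has been reached.
  void busy() {
    std::unique_lock<std::mutex> lock(mutex_);
    cond_.wait(lock, [this] {
      return busy_count_ < busy_hwm_ && write_delay_count_ < max_write_delay_;
    });
    ++busy_count_;
    // Count threads that enter while a posted change is still waiting.
    if (!pending_.empty()) ++write_delay_count_;
  }

  // A thread finished iterating; the last one out applies the queued changes.
  void idle() {
    std::lock_guard<std::mutex> lock(mutex_);
    assert(busy_count_ > 0);
    if (--busy_count_ == 0) {
      write_delay_count_ = 0;
      while (!pending_.empty()) {
        pending_.front()();
        pending_.pop();
      }
      cond_.notify_all();
    }
  }

  void connected(Proxy p) { post([this, p] { items_.insert(p); }); }
  void disconnected(Proxy p) { post([this, p] { items_.erase(p); }); }

  std::size_t size() {
    std::lock_guard<std::mutex> lock(mutex_);
    return items_.size();
  }
  std::size_t pending() {
    std::lock_guard<std::mutex> lock(mutex_);
    return pending_.size();
  }

private:
  // Apply the change now if nobody is iterating, otherwise queue it.
  void post(std::function<void()> change) {
    std::lock_guard<std::mutex> lock(mutex_);
    if (busy_count_ == 0) change();
    else pending_.push(std::move(change));
  }

  std::mutex mutex_;
  std::condition_variable cond_;
  std::size_t busy_count_ = 0;
  std::size_t write_delay_count_ = 0;
  const std::size_t busy_hwm_;
  const std::size_t max_write_delay_;
  std::set<Proxy> items_;
  std::queue<std::function<void()>> pending_;
};

// Guard idiom: busy() on construction, idle() on destruction.
template <class Proxy>
class BusyGuard {
public:
  explicit BusyGuard(DelayedChangesSketch<Proxy>& c) : c_(c) { c_.busy(); }
  ~BusyGuard() { c_.idle(); }
  BusyGuard(const BusyGuard&) = delete;
  BusyGuard& operator=(const BusyGuard&) = delete;
private:
  DelayedChangesSketch<Proxy>& c_;
};
```

In this sketch a change posted while a `BusyGuard` is alive is only queued; it takes effect when the last guard is destroyed and the busy count drops to 0. The guard does not hold the mutex while the caller iterates, so other threads can still enter until one of the two limits is reached.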

1.7.5.1
